In continuing our ongoing series, this piece within the blog series will describe the basics of querying and modifying data in MongoDB with a focus on the basics needed for the Data Vault load as well as query patterns.

In contrast to the tables used by relational databases, MongoDB uses a JSON-based document data model. Thus, documents are a more natural way to represent data as a single structure with related data embedded as sub-documents and arrays collapses what is otherwise separated into parent-child tables linked by foreign keys in a relational database. You can model data in any way that your application demands – from rich, hierarchical documents through to flat, table-like structures, simple key-value pairs, text, geospatial data, and the nodes as well as edges used in graph processing.

Though, in order to speed up queries, data stored in documents can be indexed on arbitrary attributes:

- Primary Index exists in every collection, the _id attribute.

- Compound Indexes allow the indexing against multiple keys in the document.

- MultiKey Indexes are used for array values.

- Wildcard Indexes auto-index all matching fields, including sub-documents and arrays.

- Text Indexes support for text searches with additional Full-Text Search capabilities based on Lucene in MongoDB Atlas.

- GeoSpatial Indexes for spatial geometries.

- Hashed Indexes for sharding, i.e. horizontal scaling.

Indexes leverage additional features to either support advanced use cases or reduce the index size as:

- Unique Indexes ensure that a value is not duplicated.

- Partial Indexes allow indexes on subsets of data based on expressions.

- Sparse Indexes only index documents which have the given field.

- TTL Indexes delete a document when it has expired.

- Case Insensitive Indexes support searching for data.

This being the case, the ability to model data in complex structures require a powerful language for CRUD operations.

For that fact, the MongoDB Query Language (MQL) allows one to create expressive queries against poly structured documents such as “Find anyone with a phone number starting with ‘1-212*’ “ or “Check if the person with number ‘555-444-333’ is on the ‘do not call’ list”.

There are also means for inserting, updating and inserting data, i.e. if a document does not exist it will be inserted, which are not covered in this article. To see the documentation for further details, please click here.

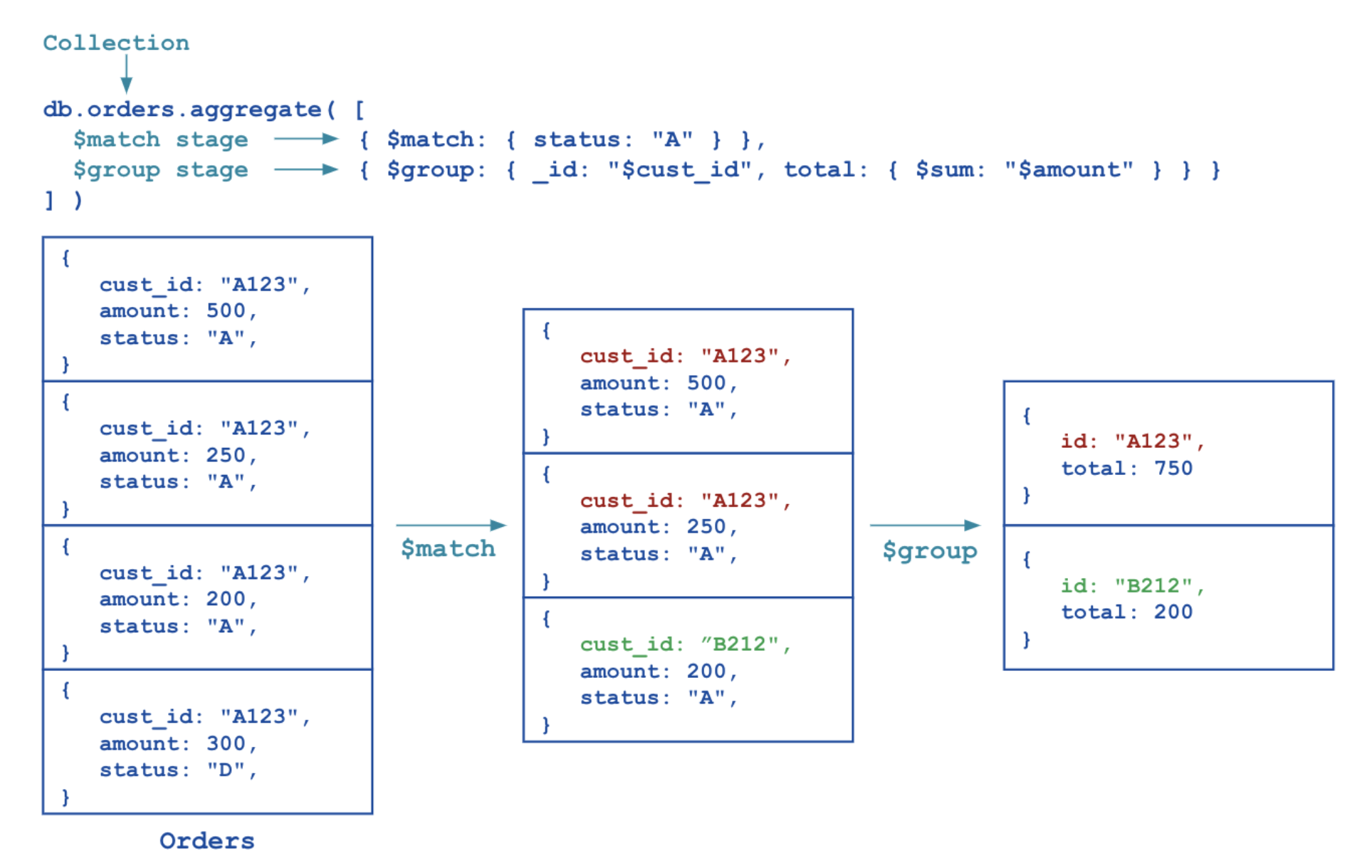

The latest release, MongoDB 4.2, also supports the usage of Aggregation Pipeline Stages in update statements as seen within this document. Aggregation Pipelines are advanced data processing pipelines used for data transformations and analytics. That being so, documents enter a multi-stage pipeline that transforms the documents into aggregated results. That requires a bit of legwork and thus an aggregation pipeline consists of stages with each stage transforming the documents as they pass through the pipeline. Pipeline stages do not need to produce one output document for every input document; e.g., some stages may generate new documents or filter out documents entirely.

The image below illustrates an example aggregation pipeline with two stages on the orders collection, that of the $match stage filters the documents by the status field and passes to the next stage those documents that have a status equal to “A”. The $group stage groups the documents by the cust_id field to calculate the sum of the amount for each unique cust_id.

Please note that pipeline stages can appear multiple times in a single pipeline.

For a list of all available stages, see Aggregation Pipeline Stages.

The following stages are used in the data vault patterns:

- $match: Filters the documents to pass only the documents that match the specified condition(s) to the next pipeline stage.

- $project: Passes along the documents with the requested fields to the next stage in the pipeline. The specified fields can be existing fields from the input documents or newly computed fields.

- $unwind: Deconstructs an array field from the input documents to output a document for each element. Each output document is the input document with the value of the array field replaced by the element.

- $group: Groups documents by some specified expression and outputs to the next stage a document for each distinct grouping. The output documents contain an _id field which contains the distinct group by key. The output documents can also contain computed fields that hold the values of some accumulator expressions such as min/max/avg/sum/etc, all grouped by the $group’s _id field.

- $lookup: Performs a left outer join to an unsharded collection in the same database to filter in documents from the “joined” collection for processing. For each input document, the $lookup stage adds a new array field whose elements are the matching documents from the “joined” collection. The $lookup stage passes these reshaped documents to the next stage.

In addition, the stages $out and $merge can be used to write the result of an aggregation pipeline back into new or existing collections.

A multitude of operators exists to modify data of documents (https://docs.mongodb.com/manual/reference/operator/aggregation/): mathematical, array modifiers, conditions, date operations, string modification, type conversions, regular expressions, and trigonometry.

Stay tuned – the next post in our series describes how to use aggregation pipelines to load hubs. If you want to learn more about querying data and using aggregation pipelines in MongoDB – please refer to the MongoDB University courses: M001: MongoDB Basics und M121: The MongoDB Aggregation Framework.

Questions? Comments?

We’d love to hear from you in the comment section below!

Wie Sie Updates und Support erhalten

Bitte senden Sie Anfragen und Funktionswünsche an [email protected].

Für Anfragen zu Data Vault-Schulungen und Schulungen vor Ort wenden Sie sich bitte an [email protected] oder registrieren Sie sich unter www.scalefree.com.

Um die Erstellung von Visual Data Vault-Zeichnungen in Microsoft Visio zu unterstützen, wurde eine Schablone implementiert, die zum Zeichnen von Data Vault-Modellen verwendet werden kann. Die Schablone ist erhältlich bei www.visualdatavault.com.