A subsidiary of a financial institution had developed a Data Vault automation solution based on Wherescape to process its data. As data volumes grew, however, the customer needed to optimize how this data was processed.

About the Client

Problem Statement

The provider wanted a data model that met its individual requirements more efficiently, so that it could better support its parent company.

The existing solution could not support this use case for several reasons:

- The client used a single standardized model for several data sources

- Growing data volumes led to critical performance and scalability issues

- The workflow could not handle very large data files and complex data sources, which slowed down processes and increased costs

The Challenge

The client wanted a reworked Wherescape solution that could handle large volumes of data and thereby accelerate business processes.

- Due to a lack of specialist knowledge, the existing solution did not fit the customer’s needs, and the model was not efficient enough

- Deep technical expertise in this area was required, since the existing solution was a standardized implementation that did not fit the customer’s individual requirements

The Solution

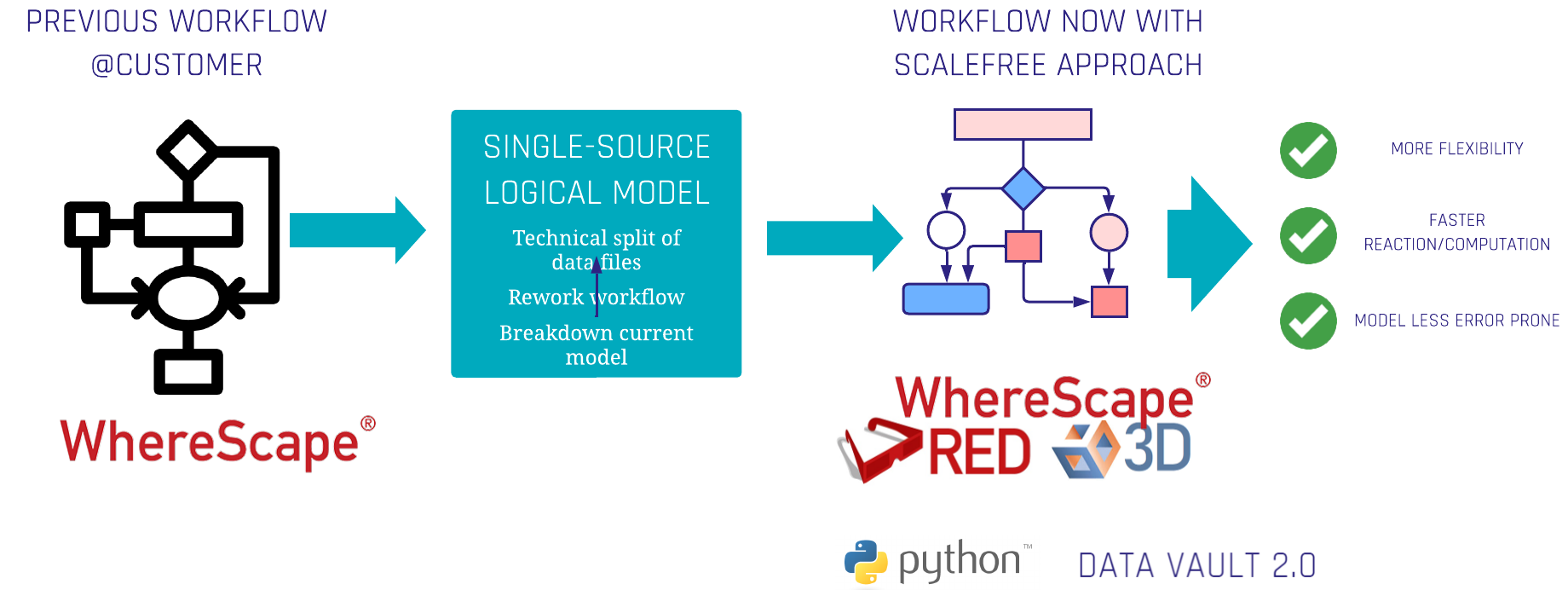

- Rework of the existing Wherescape workflow for incoming data sources

- Integration of a single-source logical model

- Breakdown of one large model, which leads to fewer errors because the dependencies were split into 1:1 relationships (one data source per model)

- Technical splitting of large CSV data files, integrated directly into the Wherescape workflow
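The CSV split step can be illustrated with a minimal Python sketch. It assumes the split is a simple row-based chunking in which every part repeats the original header row; the function name `split_csv`, the default chunk size and the `_partNNNN` naming scheme are hypothetical and not taken from the project. How the script is called from the Wherescape workflow is not shown here.

```python
import csv
import tempfile
from pathlib import Path


def split_csv(source: Path, target_dir: Path, rows_per_file: int = 100_000) -> list[Path]:
    """Split a large CSV file into smaller parts, repeating the header in each part.

    Hypothetical helper: chunk size and file naming are illustrative assumptions,
    not the configuration used in the actual Wherescape workflow.
    """
    if rows_per_file < 1:
        raise ValueError("rows_per_file must be at least 1")
    target_dir.mkdir(parents=True, exist_ok=True)
    parts: list[Path] = []
    with source.open(newline="", encoding="utf-8") as src:
        reader = csv.reader(src)
        header = next(reader, None)
        if header is None:
            return parts  # empty input file: nothing to split
        out = None
        writer = None
        try:
            for i, row in enumerate(reader):
                if i % rows_per_file == 0:
                    # Close the previous part and start a new one with the header row.
                    if out is not None:
                        out.close()
                    part = target_dir / f"{source.stem}_part{len(parts) + 1:04d}.csv"
                    out = part.open("w", newline="", encoding="utf-8")
                    writer = csv.writer(out)
                    writer.writerow(header)
                    parts.append(part)
                writer.writerow(row)
        finally:
            if out is not None:
                out.close()
    return parts


if __name__ == "__main__":
    # Small demo on a temporary file with three data rows, split into chunks of two rows.
    with tempfile.TemporaryDirectory() as tmp:
        src = Path(tmp) / "customers.csv"
        src.write_text("id,name\n1,a\n2,b\n3,c\n", encoding="utf-8")
        for p in split_csv(src, Path(tmp) / "split", rows_per_file=2):
            print(p.name, p.read_text(encoding="utf-8").splitlines())
```

Streaming the file row by row keeps memory usage flat regardless of file size, and repeating the header means each part can be loaded independently of the others.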

Concrete Results for the Client

With the new workflow, the developers were able to:

- React faster and more flexibly to new requirements from the parent company

- Reduce development time thanks to faster data processing

- Work with a less error-prone model, which reduces time and costs

Technologies Used

- Wherescape 3D

- Wherescape Red

- Python

- Data Vault 2.0