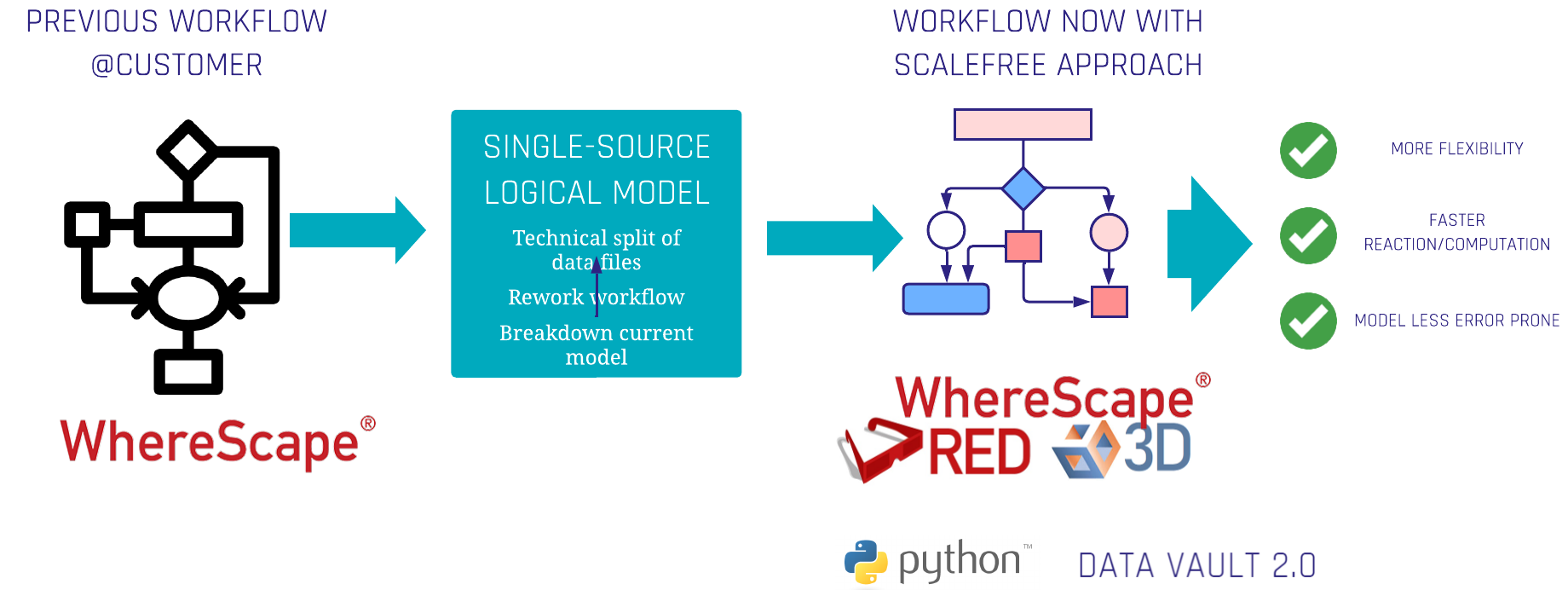

A subsidiary of a financial institution had developed a Data Vault automation tool, implemented as a WhereScape workflow, to process its data. As data volumes kept growing, the customer needed to optimize its data processing.

About the Client

Problem Statement

The existing solution could not support this use case for several reasons:

- The client uses a standardized model for several data sources

- Growing data volumes led to critical performance and scalability issues

- The workflow could not handle very large data files and complex data sources, which led to slower processing and higher costs

The Challenge

- Because of gaps in knowledge, the current solution didn’t fit the customer’s needs, and the model wasn’t efficient enough

- Deep technical expertise in this area was needed, since the current solution was a standardized implementation that didn’t fit the customer’s individual requirements

The Solution

- Rework of the current WhereScape workflow for incoming data sources

- Integration of a single-source logical model

- Breakdown of one huge model into smaller ones, which leads to fewer errors because the dependencies were split into a 1:1 relationship (1 data source to 1 model)

- Technical split of CSV data files, integrated directly into the WhereScape workflow
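
The case study does not describe how the CSV split was implemented inside the workflow. As a rough illustration of the general idea only, the following Python sketch breaks one large CSV file into smaller files that each repeat the header row, so every part can be loaded independently. The function name `split_csv`, the `rows_per_chunk` parameter, and the `_partNNNN` file naming are assumptions made for this example and are not taken from the client's solution or from WhereScape.

```python
import csv
from itertools import islice
from pathlib import Path


def split_csv(source: Path, out_dir: Path, rows_per_chunk: int) -> list[Path]:
    """Split `source` into files of at most `rows_per_chunk` data rows each.

    Every chunk repeats the header row so it can be loaded on its own.
    (Hypothetical helper for illustration; not part of any WhereScape API.)
    """
    if rows_per_chunk < 1:
        raise ValueError("rows_per_chunk must be at least 1")

    out_dir.mkdir(parents=True, exist_ok=True)
    chunks: list[Path] = []

    with source.open(newline="", encoding="utf-8") as src:
        reader = csv.reader(src)
        header = next(reader, None)
        if header is None:  # empty input file: nothing to split
            return chunks

        while True:
            # Read the next batch lazily so the whole file never sits in memory.
            rows = list(islice(reader, rows_per_chunk))
            if not rows:
                break
            # Assumed naming scheme: <original stem>_part0001.csv, _part0002.csv, ...
            target = out_dir / f"{source.stem}_part{len(chunks) + 1:04d}.csv"
            with target.open("w", newline="", encoding="utf-8") as dst:
                writer = csv.writer(dst)
                writer.writerow(header)
                writer.writerows(rows)
            chunks.append(target)

    return chunks
```

A call such as `split_csv(Path("accounts.csv"), Path("staging"), 100_000)` would write the parts into a `staging` directory and return their paths in order. Reading in batches keeps memory use bounded by the chunk size rather than by the size of the source file.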

Tangible Results for the Client

- React faster and more flexibly to incoming requirements of the parent company

- Reduce development time through faster data processing

- Have a less error-prone model, which reduces time and costs

Technologies used

Trung Ta

Senior Consultant

Phone: +49 511 87989342

Mobile: +49 170 7431870
