About the Client

A subsidiary of one of the leading media companies in Germany wanted to scale up its internal processes and take advantage of cloud technologies. To do so, it planned to migrate its architecture to the Google Cloud Platform.

Problem Statement

The major problems can be summarized as:

- Long-running data loading processes that delayed reports and analyses

- Performance and storage capacity limited by the existing hardware, which could only be expanded by buying more

- Lack of security and role management

The Challenge

- Create a migration timeline showing which data will be available and when, to support project planning

- Ensure that the current reports can be run in the new environment

- Optimize the current solution while adhering to Data Vault 2.0 standards

For these reasons, the client sought an external developer with deep knowledge of the chosen data warehouse approach and technology stack who could also provide Data Vault 2.0 consulting.

The Solution

- Jointly develop an automated dbt model generation tool that significantly shortens data loading runtimes

- Migrate parts of the architecture to Google Cloud Platform using BigQuery as the primary data warehouse

- Deactivate parts of the legacy system so that the cloud solution can take over their workloads

- Build analyses and reports in Looker that are already used in daily business processes

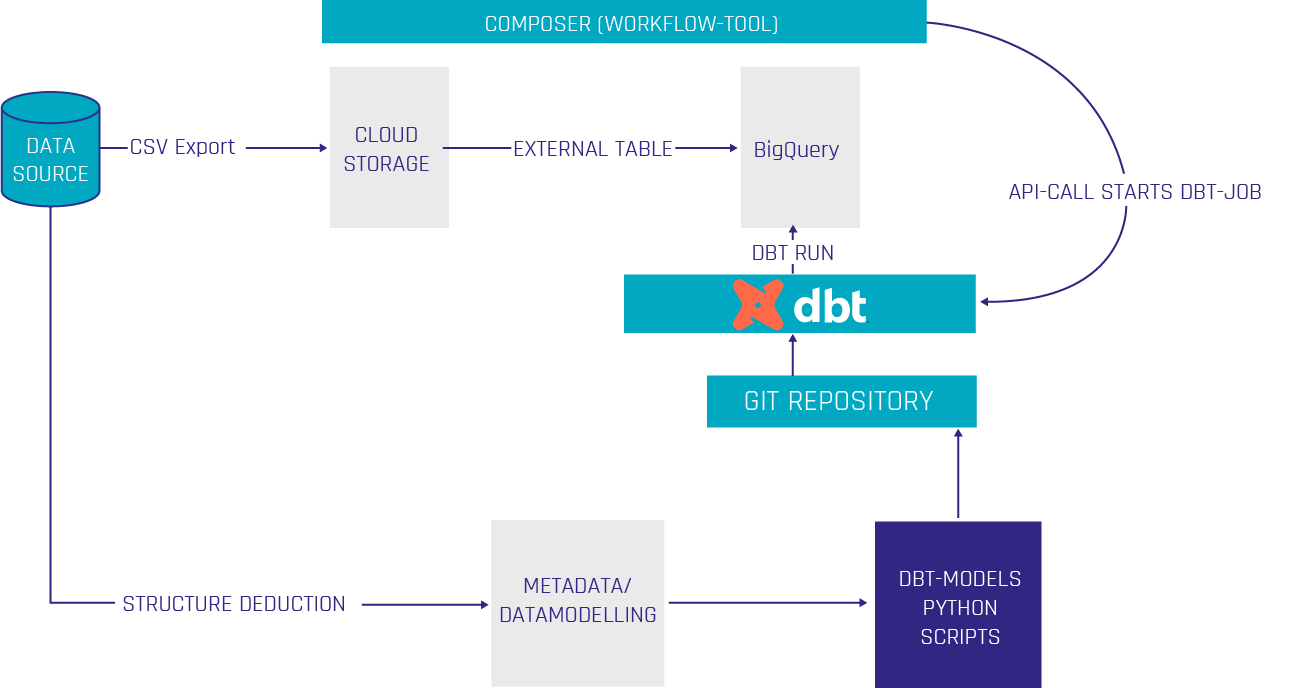

Snapshot of the current solution architecture
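The case study does not describe how the automated dbt model generation tool works internally. As a rough illustration only, the following Python sketch shows one way such a generator could render a Data Vault 2.0 hub model for BigQuery from a small metadata record. The `HubSpec` structure, the `hk_` naming convention, the MD5-based hash key and the example source names are hypothetical assumptions, not the client's actual implementation.

```python
from dataclasses import dataclass
from string import Template


# Hypothetical metadata for one Data Vault 2.0 hub. A real generator would
# presumably read this from a config file or a metadata table.
@dataclass
class HubSpec:
    name: str                  # dbt model name, e.g. "hub_customer"
    source: tuple[str, str]    # dbt (source_name, table_name)
    business_keys: list[str]   # business key columns in the source table
    record_source: str         # value written to the record_source column


# string.Template uses $placeholders, so the Jinja braces that dbt needs
# ({{ ... }} and {% ... %}) pass through unchanged.
HUB_TEMPLATE = Template("""\
{{ config(materialized='incremental', unique_key='$hash_key') }}

select distinct
    $hash_expr as $hash_key,
    $key_cols,
    current_timestamp() as load_date,
    '$record_source' as record_source
from {{ source('$src_name', '$src_table') }}
{% if is_incremental() %}
-- only insert business keys that are not yet in the hub
where $hash_expr not in (select $hash_key from {{ this }})
{% endif %}
""")


def hash_expression(keys: list[str]) -> str:
    """Build a BigQuery hash-key expression over the business keys.

    Assumed convention: cast to string, trim, upper-case, replace NULL
    with '' and join the keys with a '||' delimiter before hashing.
    """
    parts = [f"coalesce(upper(trim(cast({k} as string))), '')" for k in keys]
    joined = ", '||', ".join(parts)
    return f"to_hex(md5(concat({joined})))"


def render_hub(spec: HubSpec) -> str:
    """Render the SQL of a dbt hub model for the given metadata."""
    hash_key = "hk_" + spec.name.removeprefix("hub_")
    return HUB_TEMPLATE.substitute(
        hash_key=hash_key,
        hash_expr=hash_expression(spec.business_keys),
        key_cols=",\n    ".join(spec.business_keys),
        record_source=spec.record_source,
        src_name=spec.source[0],
        src_table=spec.source[1],
    )


if __name__ == "__main__":
    # Hypothetical example source; the names are for illustration only.
    spec = HubSpec(
        name="hub_customer",
        source=("crm", "customers"),
        business_keys=["customer_id"],
        record_source="crm.customers",
    )
    print(render_hub(spec))
```

In a setup like this, the generator would write each rendered string to a `.sql` file in the dbt project's `models/` directory, so adding a new hub becomes a metadata change instead of hand-written SQL. Links and satellites could be templated the same way.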

Tangible Results for the Client

- Faster ETL process implemented with dbt (runtime reduced from two hours to three minutes)

- Reduced deployment costs by implementing Data Vault 2.0

- Parts of the daily business processes integrated and already running in the new environment

Technologies used

- Google Cloud Platform (BigQuery, Cloud Storage, Composer)

- dbt

- Microsoft SQL Server

- Python

- Git

- SAP

Trung Ta

Senior Consultant

Phone: +49 511 87989342

Mobile: +49 170 7431870
